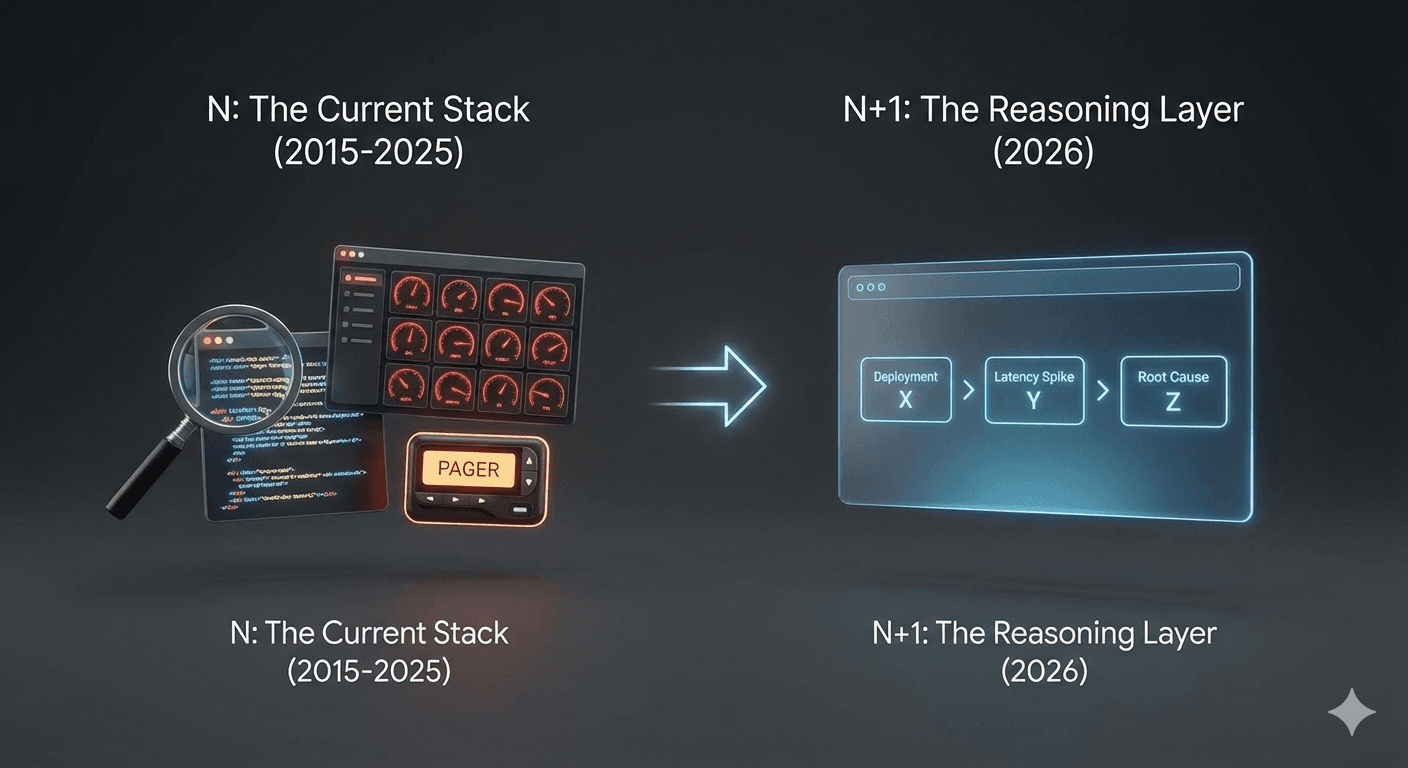

For more than a decade, reliability engineering focused on building N.

- Dashboards to visualize metrics

- Logs to reconstruct events

- Traces to follow requests

- Alerts, runbooks, and escalation paths to pull humans into the loop

The premise was simple and largely correct: if engineers can see what the system is doing, they can fix it faster. Modern observability succeeded at that goal. Most teams today are not blind to their systems. That assumption was reasonable but incomplete.

Most modern teams do not fail because data is missing. They fail because the system has grown too complex for humans to reason about consistently under pressure. Signals exist, but understanding lags behind. Uptime becomes reactive not because teams are careless, but because cognition does not scale the same way infrastructure does.

This is where the idea of N+1 enters not as “one more tool,” but as a missing capability.

What “N” Solved and Where It Stopped

The existing reliability stack is excellent at observation. It can tell you what is unhealthy, where latency increased, which service is throwing errors, and when something crossed a threshold.

What it does not do is connect those facts into a single explanation.

- It doesn’t tell you why this alert matters more than the other ten

- It doesn’t connect a deployment change from earlier in the day to a downstream symptom hours later

- It doesn’t remember that a similar incident last quarter had the same root cause

That work is still done by humans usually senior engineers, who carry the system’s mental model in their heads. As systems scale, that human reasoning layer becomes the bottleneck. Not because engineers are bad, but because distributed systems exceed what humans can reliably reason about under pressure.

This is the gap the N+1 layer is meant to fill.

The Reality of the AI SRE Space

The AI SRE space is already crowded. Startups, incumbents in observability and APM, and platform vendors are all experimenting with AI-driven analysis. The slow adoption is not due to lack of interest it’s due to the nature of reliability itself. Reliability is unforgiving. A system that is occasionally wrong, slow, or inconsistent might be acceptable in analytics or developer tooling. In incident response, it’s disqualifying.

That’s why AI SRE has behaved less like a feature rollout and more like category creation. Categories don’t win on demos. They win when enough real systems run through them and come out measurably better.

So far, that bar has been hard to clear. If you're evaluating the top AI SRE tools available in 2026, understanding what makes a true N+1 layer is essential.

What 2025 Actually Got Right and What It Didn’t

The 2025 AI wave did deliver real improvements.

- Summarization reduced noise

- Alert grouping helped teams triage faster

- Log and trace explanations made onboarding easier

These capabilities mattered and they continue to matter.

But they addressed reading, not deciding.

At the same time, many tools tried to reframe existing workflows as conversations. Dashboards and filters were exposed through chat interfaces. This worked well in exploratory contexts and demos, but struggled as a primary interface during incidents, where engineers rely on rapid visual pattern recognition across familiar views.

The core issue wasn’t “chat versus clicks.” It was that most systems stopped short of producing conclusions.

- Summarization helps you understand data faster

- Reasoning helps you know what to do next

The promised +1 was always about the second step.

So What Does the Right N+1 Actually Do?

A real N+1 is not a UI and not a chatbot. It is a reasoning system that sits above observability. Concretely, it does four things that existing stacks do not. It is important to understand what AI SRE fundamentally addresses and why this reasoning layer is now possible.

1. Builds and Maintains a System Model

This model encodes service dependencies, deployment topology, ownership boundaries, and known failure paths. It does not infer relationships on the fly during an incident; it already knows how the system is wired.

2. Correlates Signals Causally

Instead of noticing that errors and latency rose together, it reasons that a deployment changed configuration in Service A, which increased load on Service B, which exhausted a shared resource downstream. This reasoning is deterministic and repeatable.

3. Accumulates Incident Memory

When a similar failure occurs months later, the system does not start from scratch. It knows which hypotheses were false last time, which signals were misleading, and which actions actually resolved the issue.

4. Separates Reasoning from Explanation

The conclusions are produced by structured logic and stored state. Language is merely how those conclusions are communicated to humans.

This is what people mean often vaguely when they say “AI that grows from intern to senior engineer.” It does not get smarter because the model changed. It gets smarter because the system learned your system.

Why Reliability of the N+1 Matters More Than Its Intelligence

There’s a constraint that filters out most AI SRE experiments: the N+1 must work when everything else is already failing.

This is where many open-source and early-stage systems struggle. Not because they’re poorly built, but because they inherit fragile dependencies expiring credentials, external model latency, lost state on restarts, or non-deterministic outputs. In reliability workflows, these aren’t edge cases. They’re deal-breakers.

A useful comparison is PagerDuty. PagerDuty isn’t sophisticated. It doesn’t diagnose anything. It does one thing escalation and it does it reliably under stress. That dependability is why teams trust it with their worst moments.

Any N+1 layer is judged by the same standard. If it needs babysitting, fallbacks, or manual verification during incidents, it won’t be used when it matters.

Why Evaluation Feels So Hard

Evaluating AI SRE is uniquely uncomfortable. Unlike dashboards, these systems often require broad read access across infrastructure, logs, traces, and deployment systems. Security teams worry about over-permissioned agents. Platform teams worry about introducing a new dependency into the incident path.

At the same time, demos tend to look better than reality. Summaries are polished. Explanations sound confident. But without long-running customer stories, teams struggle to answer the only question that matters:

Will this still work on our worst day?

Until enough organizations can point to reduced MTTR, fewer escalations, and faster recovery in complex environments, skepticism is rational. The absence of widely cited case studies is not a marketing failure. It is a sign that the category is still maturing. For a detailed comparison of how different tools approach this challenge, see how established platforms and new AI SRE tools stack up in practice.

In reliability, belief follows repetition. A system must be correct many times, quietly, before it is trusted once loudly.

Do You Even Need an N+1?

Not every team does.

- If your system is simple and observability is clean, humans still scale

- If your system is complex but stable, runbooks and discipline often outperform discovery

- If fundamentals are missing, AI SRE is premature

The N+1 becomes necessary only when unpredictability itself becomes expensive when it slows releases, burns out engineers, and erodes confidence even when production is healthy and incidents spill into product and customer trust.

At that point, the cost is no longer downtime, organizational drag is. The organizational drag and engineer burnout from constant firefighting is exactly when N+1 tools deliver the most value.

What N+1 Changes When It Works

When a real N+1 is in place, teams stop asking “What is broken?” and start asking “What changed?” Incident response shifts from exploration to confirmation. Escalations become rarer. Releases feel safer because failures are explainable. Over time, reliability becomes boring not because nothing breaks, but because breakage is understood.

That is predictable uptime.

N+1 Is Not a Tool Choice

In 2026, predictable uptime will not come from better dashboards or smarter summaries. It will come from introducing a reasoning layer that understands your system, remembers your past, and operates reliably under stress.

That layer will not be adopted because it is impressive. It will be adopted because, over time, teams notice they rely on it and nothing breaks when they do.

That is what N+1 really means. Not one more tool in the stack, but the missing capability that turns uptime from accidental into predictable.